Next time you go to the doctor, remember to bring your genome card.

What, you don’t have one?

Of course you don’t. Wallet-sized cards containing a person’s genetic code don’t exist.

Yet they were envisioned in a 1996 Los Angeles Times article, which predicted that by 2020 the makeup of a person’s genome would drive their medical care.

The idea that we’d be basking in the fruits of ultra-personalized medicine today was put forth by scientists who were promoting the Human Genome Project, a massive, publicly funded international research effort.

Scientists knowingly overpromised the medical benefits of sequencing the human genome, and journalists went along with the narrative, according to Ari Berkowitz, a biology professor at the University of Oklahoma, who wrote about the hype around genome sequencing in the December issue of the Journal of Neurogenetics.

He pointed to “incentives for both biologists and journalists to tell simple stories, including the idea of relatively simple genetic causation of common, debilitating disease.”

Lately, the allure of a simple story has been thwarting public understanding of another technology that’s grabbed the spotlight in the wake of the genetic data boom: artificial intelligence (AI).

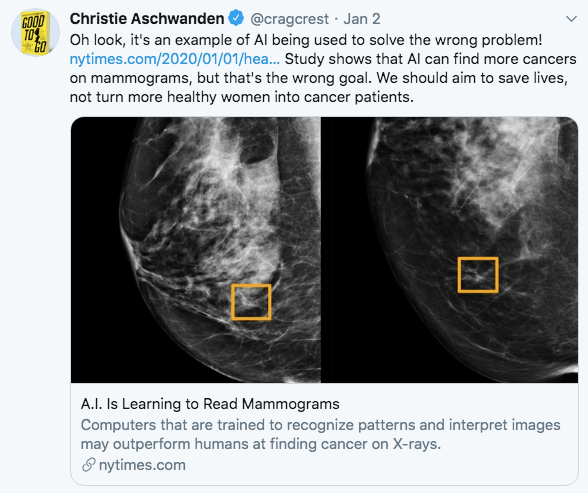

With AI, headlines often focus on the ability of machines to “beat” doctors at finding disease. Take coverage of a study published this month on a Google algorithm for reading mammograms:

CNBC: Google’s DeepMind A.I. beats doctors in breast cancer screening trial

ABC: AI-powered computer ‘outperformed’ humans spotting breast cancer in mammograms: Study

HealthDay: AI beats humans in spotting breast tumors

Time: Google’s AI Bested Doctors in Detecting Breast Cancer in Mammograms

The human-versus-machine motif is rooted in IBM’s gimmicky Deep Blue versus Garry Kasparov chess matches of the 1990s and the 2011 Watson match-up against two “Jeopardy!” champions.

But health care is not a game. Some experts wish news organizations would quit framing AI stories that way.

Headlines that suggest machines are competing against doctors are “just dumb,” said cardiologist Eric Topol, executive vice president of Scripps Research in La Jolla, Calif. “It’s not about either-or. I mean, you’re not going to leave critical diagnoses without oversight.”

Hugh Harvey, a radiologist and managing director at digital consulting firm Hardian Health, agreed headlines should be “more carefully worded,” and caveats should appear at least in the first paragraph of a story. “There’s an ethics to it, I think. As a journalist you want to tell the truth, not just the positive aspects of things.”

Questions that weren’t explored

As some commenters on Twitter pointed out, the Google study was based on the software’s ability to detect cancers on mammograms that had already been identified by a radiologist and biopsied. It didn’t measure whether the machine could achieve an important goal — helping doctors to distinguish between lesions that would benefit from treatment and those that would not.

Christie Aschwanden’s piece in Wired, Artificial Intelligence Makes Bad Medicine Even Worse, said AI has the “potential to worsen pre-existing problems such as overtesting, overdiagnosis, and overtreatment.”

But that point didn’t make it into most news coverage.

While HealthDay and ABC included caveats in their stories and emphasized the need for further study, CNBC and Time did not. One news outlet, Vox, tempered the hype in its headline: AI can now outperform doctors at detecting breast cancer. Here’s why it won’t replace them. Vox included plenty of cautions and caveats, but they came near the end.

Further, none of those news outlets explored the potential financial cost of implementing an AI product for breast cancer detection.

The rhetoric of machines taking over

The rhetoric of machines replacing radiologists has been fomented not by medical experts but by the tech sector. Sun Microsystems co-founder Vinod Khosla asserted in 2012 that machines will replace doctors. A computer science professor and AI expert, Geoffrey Hinton, declared in 2016 that it was “quite obvious that we should stop training radiologists.”

That idea may have pumped up investors but could ultimately hurt patients. At least anecdotally, Harvey said, some young doctors are eschewing the field of radiology in the UK, where there is a shortage.

Harvey drew chuckles during a speech at the Radiological Society of North America in December when he presented a slide showing that while about 400 AI companies have sprung up in the last five years, the number of radiologists who have lost their jobs stands at zero.

(Medium ran Harvey’s defiant explanation of why radiologists won’t easily be nudged aside by computers.)

How good is the evidence?

The human-versus-machine fixation distracts from questions of whether AI will benefit patients or save money.

We’ve often written about the pitfalls of reporting on drugs that have only been studied in mice. Yet some news stories don’t point out when an AI application has yet to be tested in real-world settings in randomized clinical trials. Almost always, a computer’s “deep learning” ability is trained and tested on cleaned-up datasets that don’t necessarily predict how it will perform in actual patients.

Harvey said there’s a downside to headlines “overstating the capabilities of the technology before it’s been proven.”

“I think patients who read this stuff can get confused. They think, if AI is so good why can’t I get it now? They might worry about whether a doctor is any good.”

There is also the long-term risk of making the public skeptical about technology. “It puts people off. They don’t appreciate that it can be useful,” said Saurabh Jha, MD, associate professor of radiology at the Hospital of the University of Pennsylvania.

The weakness of AI evidence has been highlighted in some news coverage, such as Liz Szabo’s Kaiser Health News story, A Reality Check On Artificial Intelligence: Are Health Care Claims. In Undark, Jeremy Hsu reported on the lack of evidence for a triaging app, Babylon Health.

Distracting from health care’s real problems

Harvey said journalists also need to point out “the reality of what it takes to get it into the market and into the hands of end users.”

He cites lung cancer screening, for which some stories cover “how good the algorithm is at finding lung cancers and not much else.” For example, a story that appeared in the New York Post (headline: “Google’s new AI is better at detecting lung cancer than doctors”) declared that “AI is proving itself to be an incredible tool for improving lives” without presenting any evidence.

“What (such coverage) doesn’t talk about is the change in lung screening pathways that would need to be implemented across wide geographic populations,” Harvey said. “In order for someone to attend for a lung screening they have to be aware that screening is available. They have to be vetted to make sure they are appropriate for the screening process. They have to have support nurses for when cancers are found, and they have to have treatment pathways available to them. It’s not just, ‘I can find lung cancer.’ ”

A JAMA editorial by physicians Robert Wachter and Ezekiel Emanuel threw cold water on the idea that better analytics will transform health care. Rather, they said, a “narrow focus on data and analytics will distract the health system” from steps to change the “structure, culture, and incentives” that govern how doctors and patients behave.

For example, while it might seem “logical” for AI to predict which patients will skip their medication, it’s unlikely to fix the problem, they write. “Medicine needs to change how physicians and other clinicians interact with non-adherent patients and change patients’ medication-taking habits.”

Journalists have to get educated

Back in the 1990s, Berkowitz wrote, scientists understood that diseases rarely can be attributed to one gene, or even a group of genes. Yet that fact didn’t inform prevailing news coverage.

We’ve often cited the need for journalists to tap independent sources when reporting on medical interventions. Berkowitz said journalists would also do well to bone up on the underlying science.

“That may entail reading journal review articles as well as closely reading original research articles announcing new and exciting findings,” he said. “I know that’s not a satisfying answer, particularly because journalists are often facing a deadline and just don’t have the time or the scientific background, but I don’t see a better way.”